From pilots to production: agentic AI architectures for banking in 2026

Most banks are now running some kind of generative AI pilot. Very few have anything resembling a production-scale AI operating layer.

The FinTech Futures piece sponsored by Oracle Financial Services argues that 2026 is when that gap starts to close, with fleets of AI agents sitting above existing banking systems, orchestrating onboarding, servicing, fraud and operations as if they were digital colleagues rather than one-off chatbots. (fintechfutures.com)

At nocodecreative.io, this is exactly the sort of architecture we design and implement using Azure, Power Platform and n8n for banks, fintechs and other regulated firms that want more than a demo.

In this guide, we take that vision and make it practical: an agent fabric you can actually build, how it fits on top of your current cores, and a realistic roadmap for SMEs and mid-market banks.

Why 2026 is the tipping point for AI agents in financial services

Several trends are converging at once.

First, major vendors are now shipping opinionated platforms for AI agents rather than just models. Oracle’s OCI Generative AI Agents platform, generally available from March 2025, provides a managed environment for building, deploying and governing enterprise AI agents, with features for orchestration, tool calling and lifecycle management. (blogs.oracle.com) Microsoft, Google and others are pushing similar ideas in their own stacks.

Second, the banking conversation has shifted from “should we experiment with AI?” to “how do we operationalise AI safely at scale?” The FinTech Futures article on banking in 2026 positions AI agents as a new operating layer that coordinates customer-facing experiences and domain workflows across onboarding, lending, payments and servicing. (fintechfutures.com)

Third, real banks are moving. Eurobank, for example, is partnering with Fairfax Digital Services, EY and Microsoft to build an “AI Factory” on Azure that embeds agentic AI into core banking operations rather than sitting in side projects. (fintechfutures.com)

Finally, regulation is catching up. Supervisors like the FCA and PRA in the UK, plus EU regulators, are moving from general AI principles towards concrete guidance on explainability, model risk governance and data access controls. That pushes banks toward architectures where AI behaviour is observable, auditable and policy-driven by design.

Taken together, 2026 looks less like a moment for flashy demos and more like the point where AI becomes another piece of critical infrastructure.

From chatbots to an AI operating layer: customer-facing vs domain-specific agents

Most banks’ “AI” today is a chatbot glued to a FAQ. The agentic model is fundamentally different because it separates the interface from the execution.

- Customer-facing agents handle conversations in channels such as web chat, mobile app, WhatsApp, Teams, voice or contact centre desktops.

- Domain-specific agents specialise in concrete tasks such as KYC, payments investigations, credit underwriting, collections, treasury operations or fraud.

Customer-facing agents collect intent and context, then delegate to domain agents that call APIs and tools, apply policy and draft decisions. Those domain agents may also talk to each other.
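To make the delegation concrete, here is a minimal Python sketch of a customer-facing agent handing a classified intent to a domain agent. The intent names, the `Case` shape and the keyword classifier are hypothetical placeholders; in a real system the classification would come from a model and each handler would call your agent runtime.

```python
from dataclasses import dataclass, field
from typing import Callable

@dataclass
class Case:
    """Context a customer-facing agent collects before delegating (hypothetical shape)."""
    customer_id: str
    intent: str
    details: dict = field(default_factory=dict)

# Hypothetical domain agents: each returns a draft, never a final action.
def payments_agent(case: Case) -> str:
    return f"Draft triage for failed payment {case.details.get('payment_id', '?')}"

def lending_agent(case: Case) -> str:
    return f"Draft document checklist for customer {case.customer_id}"

DOMAIN_AGENTS: dict[str, Callable[[Case], str]] = {
    "payment_failed": payments_agent,
    "loan_documents": lending_agent,
}

def classify_intent(message: str) -> str:
    """Placeholder for a model call: naive keyword matching, for illustration only."""
    text = message.lower()
    if "payment" in text:
        return "payment_failed"
    if "loan" in text:
        return "loan_documents"
    return "unknown"

def delegate(customer_id: str, message: str, details: dict) -> str:
    intent = classify_intent(message)
    agent = DOMAIN_AGENTS.get(intent)
    if agent is None:
        # No matching domain agent: hand over to a person rather than guess.
        return "Escalated to a human colleague"
    return agent(Case(customer_id, intent, details))
```

The customer-facing side only decides *who* should handle the request; everything that touches systems of record stays inside the domain agents, which is what lets you govern them separately.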

Platforms like OCI AI Agent Platform capture this pattern natively: they support multi-turn conversations, context retention, orchestration of multiple tools and services, retrieval augmented generation (RAG) over enterprise data and human-in-the-loop controls for sensitive actions. (oracle.com)

In practice:

- A customer agent in the mobile app might detect that a payment failed, summarise the issue and ask the “payments investigations” agent to triage it.

- The payments agent enriches the case with KYC data, sanctions hits and system logs, then suggests next actions to a human analyst in a case management UI.

- A lending agent keeps track of documentation gaps across a loan portfolio, nudging customers and relationship managers with next best actions.

Think of this as an AI operating layer that coordinates work between humans, systems and models, rather than a monolithic bot that tries to do everything.

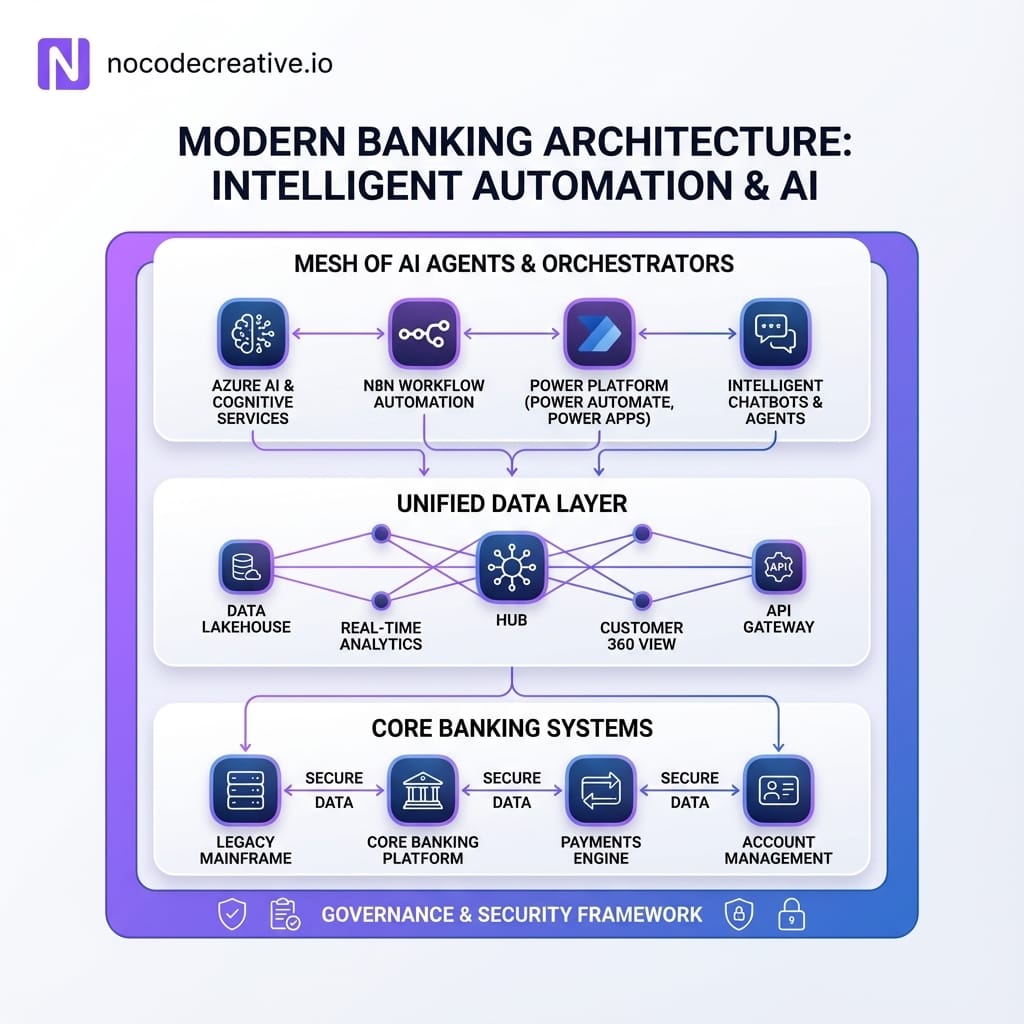

The reference architecture: thin core, unified real-time data and an agent fabric

The FinTech Futures and Oracle content describes a banking architecture that many mid-market banks can realistically adopt without ripping out their core. You can think of it in three layers:

1. Core systems that stay “thin”

Core banking, cards, payments, treasury, CRM and risk engines continue to own the golden record and transactional integrity. Over time, these are made “thinner”. More product logic and experience logic move out into the agent layer and adjacent services, while the core focuses on contracts, balances, postings, accounting and regulatory reporting.

Access is exposed through API gateways (such as Azure API Management, Kong or Apigee) and event streams (such as Azure Event Hubs, Kafka or AWS MSK) that publish important business events in near real time. Even file or batch integration can be wrapped in n8n or Logic Apps flows to make it event-like.
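As a minimal sketch of that last point, assuming a nightly CSV export from a legacy system, a flow might turn each batch row into an event-shaped message before publishing it to Event Hubs or Kafka. The column names, event type and envelope fields are hypothetical (loosely modelled on CloudEvents attribute names), and the publish step itself is left out; in n8n or Logic Apps the same logic would sit between a file trigger and a publish action.

```python
import csv
import hashlib
import io
import json
from datetime import datetime, timezone

def rows_to_events(csv_text: str, source: str, event_type: str) -> list[dict]:
    """Convert each CSV row into an event envelope (hypothetical schema)."""
    events = []
    for row in csv.DictReader(io.StringIO(csv_text)):
        payload = json.dumps(row, sort_keys=True)
        events.append({
            # A deterministic ID derived from source + payload lets consumers
            # de-duplicate if the same file is processed twice.
            "id": hashlib.sha256(f"{source}|{payload}".encode()).hexdigest()[:16],
            "type": event_type,
            "source": source,
            "time": datetime.now(timezone.utc).isoformat(),
            "data": row,
        })
    return events
```

For example, `rows_to_events("account_id,status\nA1,overdue\n", "legacy-loans", "loan.status_changed")` yields one event whose `data` is `{"account_id": "A1", "status": "overdue"}`.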

2. A unified, governed data layer

AI agents need consistent, timely and trustworthy data. A practical pattern we use with clients is:

- A central data platform such as Azure Data Lake or Microsoft Fabric for curated data and “agent memory” with strong governance.

- Event streams feeding operational data into that platform.

- A vector store (e.g., Azure AI Search) that indexes documents, product terms, policies and case history for RAG-style querying.
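The retrieval step behind RAG-style querying can be illustrated without any cloud service. This minimal sketch ranks indexed snippets by cosine similarity between embedding vectors; the snippets are sample text and the three-dimensional vectors are made-up stand-ins for real embeddings. In production, Azure AI Search (or another vector store) would handle indexing and ranking.

```python
import math

def cosine(a: list[float], b: list[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0

# Hypothetical index: sample policy snippets with pre-computed toy embeddings.
INDEX = [
    ("Overdraft fees are waived for the first 30 days.", [0.9, 0.1, 0.0]),
    ("Sanctions hits must be escalated to compliance.", [0.0, 0.2, 0.9]),
    ("Card disputes must be raised within 120 days.", [0.2, 0.9, 0.1]),
]

def retrieve(query_vector: list[float], k: int = 2) -> list[str]:
    """Return the k snippets most similar to the query, used to ground the agent."""
    ranked = sorted(INDEX, key=lambda item: cosine(query_vector, item[1]), reverse=True)
    return [text for text, _ in ranked[:k]]
```

The agent's prompt would then include only the returned snippets, which is also what you log later to show what context a decision was based on.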

3. An agent fabric and orchestration layer

On top of data and APIs, you add the intelligence layer:

- One or more agent runtimes such as OCI AI Agent Platform, Azure OpenAI with tool calling, or other enterprise agent frameworks.

- Workflow orchestrators such as n8n, Azure Logic Apps or Power Automate that handle sequencing, retries, error handling and integration with legacy services.

- Channel adaptors for web, mobile, contact centre, Microsoft Teams and internal line-of-business apps.

This is where n8n shines. It becomes the connective tissue that listens to events from cores, calls AI agents with the right context, invokes downstream APIs based on agent tool calls, and persists audit trails.
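The same connective-tissue role can be sketched in plain Python to show the sequence an n8n workflow would implement: receive a core event, assemble context, ask an agent what to do, execute only the tool calls it requests, and record each step. The `fake_agent` function, the tool names and the event shape are hypothetical; a real workflow would call your agent runtime over HTTP and use n8n's own nodes for each step.

```python
from typing import Any, Callable

def fake_agent(event: dict, context: dict) -> list[dict]:
    """Stand-in for a model with tool calling: returns the tool calls it requests."""
    if event["type"] == "payment.failed":
        return [{"tool": "create_case",
                 "args": {"payment_id": event["payment_id"], "reason": context["last_error"]}}]
    return []

def create_case(payment_id: str, reason: str) -> str:
    return f"CASE-{payment_id}"  # would call a case-management API in practice

TOOLS: dict[str, Callable[..., Any]] = {"create_case": create_case}

def handle_event(event: dict, fetch_context: Callable[[dict], dict]) -> list[dict]:
    """Run one event through context -> agent -> tools, returning a step-by-step trail."""
    trail = [{"step": "event_received", "type": event["type"]}]
    context = fetch_context(event)
    trail.append({"step": "context_loaded", "keys": sorted(context)})
    for call in fake_agent(event, context):
        # An unknown tool name raises KeyError, so nothing outside TOOLS can run.
        result = TOOLS[call["tool"]](**call["args"])
        trail.append({"step": "tool_called", "tool": call["tool"], "result": result})
    return trail
```

The agent never calls an API itself; it only *requests* calls, and the orchestrator decides whether and how they run.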

Governance by design with human-in-the-loop

Enterprise AI agents that touch money need guardrails as a first-class concern, not an afterthought. Translating vendor capabilities into a neutral blueprint involves several key principles:

Least privilege data access

Agents assume identities (for example managed identities in Azure) and use policy-based access to data and tools. Never hard-code keys or other secrets inside prompts.
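A deny-by-default access check is the core of least privilege. In this minimal sketch each agent identity maps to an explicit set of scopes; the identities and scope strings are hypothetical, and in Azure the equivalent grants would normally live in role assignments for managed identities rather than in application code.

```python
# Hypothetical grants: agent identity -> set of "resource:action" scopes.
GRANTS: dict[str, frozenset[str]] = {
    "agent-kyc": frozenset({"kyc_records:read", "sanctions:read"}),
    "agent-payments": frozenset({"payments:read", "cases:write"}),
}

def is_allowed(identity: str, resource: str, action: str) -> bool:
    """Deny by default: unknown identities and ungranted scopes are refused."""
    return f"{resource}:{action}" in GRANTS.get(identity, frozenset())

def read_resource(identity: str, resource: str) -> str:
    if not is_allowed(identity, resource, "read"):
        raise PermissionError(f"{identity} may not read {resource}")
    return f"<{resource} data>"  # placeholder for the real data call
```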

Explicit capabilities

Each agent can only call a defined set of tools. n8n workflows or Logic Apps become the trusted execution boundary that decides which API calls are actually made on the agent’s behalf.
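The trusted execution boundary can be expressed as a per-agent allow-list plus argument checks, applied before any API call is made. This is a minimal sketch: the agent names, tool names and the simple field check are assumptions, and a real boundary would typically validate each call against a full JSON Schema.

```python
from typing import Optional

# Hypothetical capability map: which tools each agent may request...
CAPABILITIES = {
    "payments-investigator": {"get_payment", "create_case"},
    "customer-chat": {"get_payment"},
}
# ...and which arguments each tool expects.
REQUIRED_ARGS = {
    "get_payment": {"payment_id"},
    "create_case": {"payment_id", "summary"},
}

def rejection_reason(agent: str, tool: str, args: dict) -> Optional[str]:
    """Return why a requested tool call must be blocked, or None if it may proceed."""
    if tool not in CAPABILITIES.get(agent, set()):
        return f"{agent} is not allowed to call {tool}"
    expected = REQUIRED_ARGS[tool]
    if set(args) != expected:
        return f"{tool} expects {sorted(expected)}, got {sorted(args)}"
    return None
```

Rejecting unexpected arguments, not just missing ones, stops an agent from smuggling extra parameters into an otherwise permitted call.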

Human approval for material actions

Anything that moves money, changes limits, approves credit or alters sensitive data is drafted by an agent and then approved by a human through a clear UI. The agent cannot “self-approve”.
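One way to make self-approval structurally impossible is to record who drafted an action and refuse any decision that does not come from a different human. A minimal sketch with hypothetical names; a production system would also check the approver's authority limits and persist state in your case management tool.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class DraftAction:
    """An action drafted by an agent that cannot take effect until a human decides."""
    description: str
    amount: float
    drafted_by: str                  # identity of the drafting agent or person
    status: str = "pending"          # pending -> approved | rejected
    decided_by: Optional[str] = None

def can_decide(action: DraftAction, approver: str, approver_is_human: bool) -> bool:
    # Only humans decide, only once, and never on something they drafted themselves.
    return (action.status == "pending"
            and approver_is_human
            and approver != action.drafted_by)

def decide(action: DraftAction, approver: str, approver_is_human: bool, approve: bool) -> DraftAction:
    if not can_decide(action, approver, approver_is_human):
        raise PermissionError(f"{approver} may not decide on this action")
    action.status = "approved" if approve else "rejected"
    action.decided_by = approver
    return action
```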

Full audit trails

Every step is logged: prompts, retrieved context, tools invoked, decisions suggested, human overrides and final outcomes. This makes life easier for internal audit, model risk and external regulators.
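A minimal sketch of one audit record per agent step, serialised as a JSON line so it can be appended to a log store and queried later. The field names mirror the items listed in the paragraph above but are otherwise hypothetical.

```python
import json
from datetime import datetime, timezone

# Hypothetical field names for the audit items: prompt, context, tools, decision, override, outcome.
AUDIT_FIELDS = ("prompt", "retrieved_context", "tools_invoked",
                "suggested_decision", "human_override", "final_outcome")

def audit_line(case_id: str, agent: str, **fields) -> str:
    """Serialise one agent step as a JSON line; absent fields become null, not omitted."""
    unknown = set(fields) - set(AUDIT_FIELDS)
    if unknown:
        raise ValueError(f"Unexpected audit fields: {sorted(unknown)}")
    record = {
        "ts": datetime.now(timezone.utc).isoformat(),
        "case_id": case_id,
        "agent": agent,
        **{name: fields.get(name) for name in AUDIT_FIELDS},
    }
    return json.dumps(record, sort_keys=True)
```

Writing every field on every line, even when it is null, keeps the records uniform, so auditors can query "which decisions had a human override" without special-casing missing keys.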

Policy versioning and explainability

Business policies and risk appetites live in configurable rules, not scattered across prompts. When an agent declines an application or escalates an alert, it explains why in terms a supervisor can inspect.
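Keeping policy in versioned configuration means a decision can cite exactly which rule and version applied. A minimal sketch; the rule IDs, fields and thresholds are invented for illustration, and real risk appetites would come from your credit and risk teams.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Rule:
    rule_id: str
    field: str
    max_value: float
    reason: str

# Hypothetical policy, loaded from configuration rather than embedded in prompts.
POLICY = {
    "version": "2026.01",
    "rules": [
        Rule("DTI-01", "debt_to_income", 0.45, "Debt-to-income ratio above appetite"),
        Rule("LTV-02", "loan_to_value", 0.80, "Loan-to-value ratio above appetite"),
    ],
}

def evaluate(application: dict) -> dict:
    """Return a decision plus the rule IDs, reasons and policy version behind it."""
    breaches = [r for r in POLICY["rules"] if application[r.field] > r.max_value]
    return {
        "decision": "refer" if breaches else "proceed",
        "policy_version": POLICY["version"],
        "reasons": [f"{r.rule_id}: {r.reason}" for r in breaches],
    }
```

The agent can then phrase the `reasons` for a customer or supervisor, but the rules themselves stay inspectable and change only through a new policy version.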

Pattern 1: Hyper-personalised customer engagement agents

The first pattern many banks pursue is better digital engagement. A customer engagement agent typically sits across web, mobile, contact centre, chat and possibly in-app Microsoft Copilot experiences. It pulls a 360-degree view of the customer, uses RAG to ground recommendations, and suggests next best actions.

An example n8n workflow might:

- Listen for an event such as “high-value payment failed”, “card declined three times” or “loan maturity approaching”.

- Fetch context from core systems, CRM and recent service tickets.

- Call a domain agent that drafts an explanation and recommended options.

- Pass that content to the appropriate channel bot (mobile in-app assistant, web chat or agent assist in the call centre).
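Triggers such as “card declined three times” need a little state. A minimal sketch of a sliding-window counter that fires once a card reaches three declines within a time window; the window length and inputs are assumptions, and in n8n this logic might live in a Code node backed by a shared store rather than in process memory.

```python
from collections import defaultdict, deque

class DeclineTrigger:
    """Fire when a card sees `threshold` declines within `window_seconds`.

    Assumes timestamps for a given card arrive in ascending order.
    """

    def __init__(self, threshold: int = 3, window_seconds: int = 600):
        self.threshold = threshold
        self.window = window_seconds
        self.declines: dict[str, deque] = defaultdict(deque)

    def observe(self, card_id: str, timestamp: float) -> bool:
        times = self.declines[card_id]
        times.append(timestamp)
        # Drop declines that have fallen out of the window.
        while times and timestamp - times[0] > self.window:
            times.popleft()
        if len(times) >= self.threshold:
            times.clear()  # reset so one burst triggers one outreach, not several
            return True
        return False
```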

Where you are already invested in Microsoft Dynamics and Power Platform, this can complement native Copilot experiences rather than compete with them.

Pattern 2: Onboarding and KYC domain agents

Onboarding and KYC is a sweet spot for early value. The vision is very achievable with current tools:

- A front-door conversational agent (built in Microsoft Copilot Studio, formerly Power Virtual Agents, or with Azure OpenAI on your website or as a Teams bot) gathers data and documents.

- n8n or Power Automate orchestrates calls to identity verification APIs, credit bureaus, sanctions lists, and your CRM.

- Domain agents take on specific jobs such as document classification, data extraction, risk scoring against policy, and generating human-readable decision summaries.

Behind the scenes, all documents and decisions are written to a governed data store like Azure Data Lake or Fabric. High-risk cases are routed to human underwriters with a clean summary. Outcomes feed back into the agent’s retrieval corpus so future decisions improve.
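The routing rule at the end of that flow can be kept deliberately simple and transparent. In this minimal sketch the check names, weights, threshold and queue names are invented for illustration; the point is that verification results combine into a score with visible contributions, and anything above the threshold goes to a human underwriter with a readable summary.

```python
# Hypothetical weights for failed or flagged onboarding checks.
WEIGHTS = {
    "id_verification_failed": 40,
    "sanctions_possible_match": 50,
    "address_mismatch": 15,
    "thin_credit_file": 10,
}
REVIEW_THRESHOLD = 40

def route_application(flags: set[str]) -> dict:
    """Score the raised flags and pick a queue, keeping each contribution visible."""
    contributions = {flag: WEIGHTS[flag] for flag in sorted(flags)}
    score = sum(contributions.values())
    route = "human_underwriter" if score >= REVIEW_THRESHOLD else "standard_queue"
    summary = ", ".join(f"{f} (+{w})" for f, w in contributions.items()) or "no flags"
    return {"score": score, "route": route, "summary": summary}
```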

Need a partner? If you want to design that onboarding fabric without locking into a single vendor, our team’s work on AI automation and agent services is a good starting point.

Pattern 3: Financial crime and fraud investigation agents

Financial crime is data and investigation heavy, making it ideal territory for domain-specific agents. A practical pattern includes:

Alert triage agent

When a sanctions or transaction monitoring system raises an alert, an agent automatically gathers KYC data, related transactions, counterparties, device fingerprints and historical cases.
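Gathering that context is mostly waiting on other systems, so the lookups can run concurrently. A minimal asyncio sketch; the fetch functions are hypothetical stand-ins (simulated with short sleeps) for calls to your KYC, transaction and case history APIs.

```python
import asyncio

# Hypothetical data sources; each would be an API call in practice.
async def fetch_kyc(customer_id: str) -> dict:
    await asyncio.sleep(0.01)
    return {"risk_rating": "medium"}

async def fetch_transactions(customer_id: str) -> list:
    await asyncio.sleep(0.01)
    return [{"amount": 9500, "counterparty": "X Ltd"}]

async def fetch_prior_cases(customer_id: str) -> list:
    await asyncio.sleep(0.01)
    return []

async def build_alert_context(alert: dict) -> dict:
    cid = alert["customer_id"]
    # Run lookups concurrently; return_exceptions=True stops one failing source
    # from sinking the whole triage, and the gap is recorded instead.
    kyc, txns, cases = await asyncio.gather(
        fetch_kyc(cid), fetch_transactions(cid), fetch_prior_cases(cid),
        return_exceptions=True,
    )
    context = {"alert_id": alert["id"], "kyc": kyc, "transactions": txns, "prior_cases": cases}
    context["missing"] = sorted(k for k, v in context.items() if isinstance(v, Exception))
    return context
```

Run it with `asyncio.run(build_alert_context({"id": "AL-1", "customer_id": "C9"}))`. Surfacing a `missing` list matters here: an analyst should see that, say, case history was unavailable, rather than read a summary that silently omits it.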

Contextual investigator copilot

Inside your case management tool, an embedded agent summarises the situation, highlights anomalies and proposes lines of inquiry. It drafts SAR narratives or memos for analyst review.

Payments investigation hub

For real-time payment failures or suspicious activity, n8n or Logic Apps ingest events, kick off domain agents to analyse the flow and push a summarised case into Teams or a Power App for human resolution.

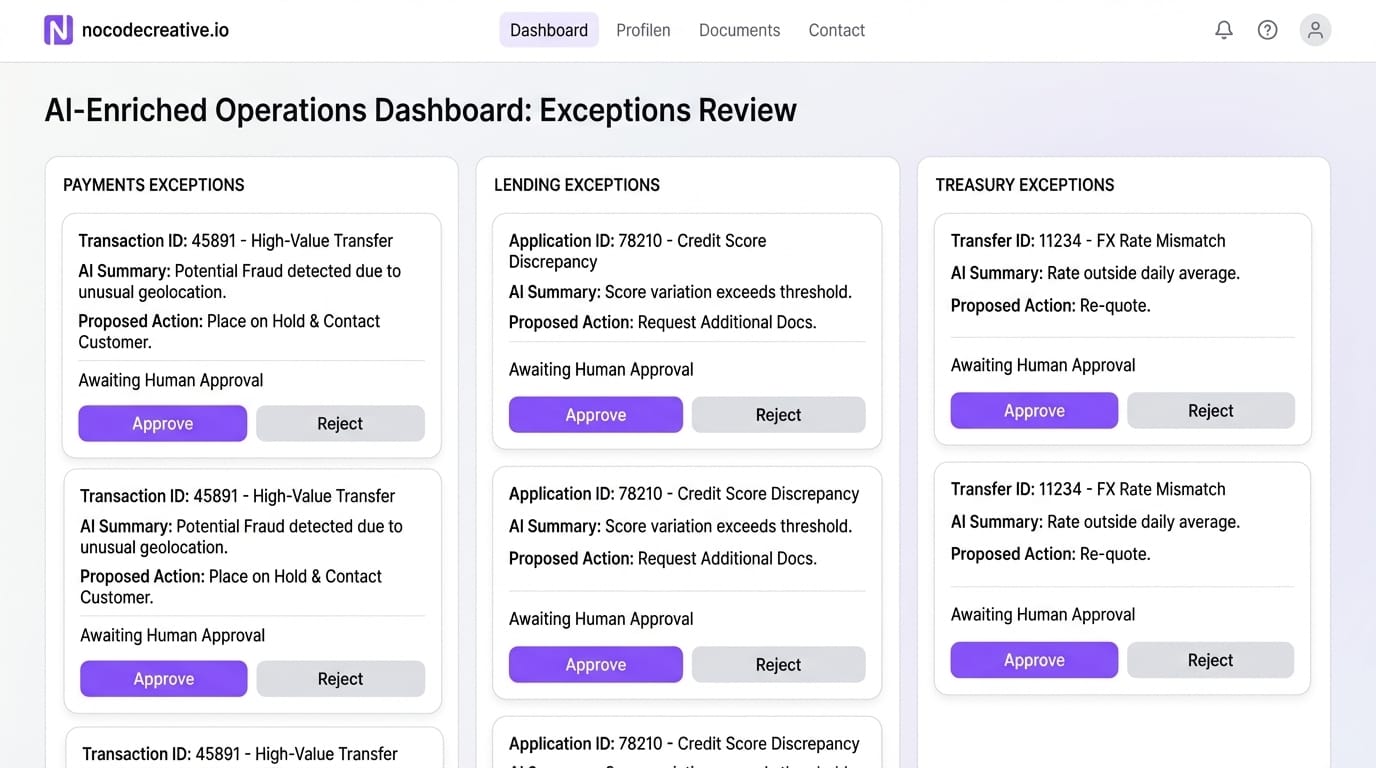

Pattern 4: Back-office exception handling mesh

Most banks leak time and money through operational exceptions. A back-office exception mesh uses an event stream of exceptions from lending, treasury, and payments systems.

Domain agents, each dedicated to a class of exception (such as “settlement break” or “missing KYC document”), are orchestrated by n8n or Logic Apps. The orchestration layer routes each new exception to the right agent, which collects evidence and drafts remediation steps. A Power Apps or web dashboard then shows operations teams a single queue of AI-enriched exceptions.
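A single queue of exceptions needs an ordering. A minimal sketch using a priority heap, assuming each exception carries a type, an amount at risk and an age; the exception types, handler names and scoring formula are hypothetical.

```python
import heapq
import itertools

# Hypothetical mapping from exception type to the domain agent that handles it.
HANDLERS = {"settlement_break": "agent-settlements", "missing_kyc_document": "agent-kyc"}
_counter = itertools.count()  # tie-breaker so equal priorities keep arrival order

def priority(exc: dict) -> float:
    # Larger amounts at risk and older items surface first (negated for a min-heap).
    return -(exc["amount_at_risk"] / 1000 + exc["age_hours"])

def enqueue(queue: list, exc: dict) -> None:
    # Unrecognised exception types fall back to a human triage queue.
    exc["handler"] = HANDLERS.get(exc["type"], "human-triage")
    heapq.heappush(queue, (priority(exc), next(_counter), exc))

def next_exception(queue: list) -> dict:
    return heapq.heappop(queue)[2]
```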

Building on what you have

The key to making this work in the real world is layering, not replacing. A pragmatic migration pattern looks like this:

- Wrap your existing cores with API gateways and event publishers.

- Introduce a central tool catalogue inside your agent fabric: each tool corresponds to a well-defined API or workflow.

- Keep your existing workflow engines and integration buses, but expose them as tools agents can call.

- Start with read-heavy use cases where agents are advisory, then move to write actions that still require human confirmation.
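A tool catalogue can be as simple as a list of entries that each describe an existing API or workflow, from which you generate the tool definitions an agent runtime expects. This minimal sketch emits definitions in the JSON Schema-based function format used by OpenAI-style tool calling (as offered through Azure OpenAI); the catalogue entries and the `mode` field are hypothetical. Write tools can be withheld so that advisory-phase agents only ever see read tools.

```python
# Hypothetical catalogue: each tool wraps an existing API or workflow.
CATALOGUE = [
    {"name": "get_account_balance", "mode": "read",
     "description": "Read the current balance from the core banking API.",
     "params": {"account_id": "string"}},
    {"name": "start_refund_workflow", "mode": "write",
     "description": "Trigger the existing refund workflow; requires human confirmation.",
     "params": {"payment_id": "string", "amount": "number"}},
]

def tool_definitions(include_writes: bool) -> list[dict]:
    """Build OpenAI-style function tool definitions from the catalogue."""
    tools = []
    for entry in CATALOGUE:
        if entry["mode"] == "write" and not include_writes:
            continue  # advisory-phase agents only see read tools
        tools.append({
            "type": "function",
            "function": {
                "name": entry["name"],
                "description": entry["description"],
                "parameters": {
                    "type": "object",
                    "properties": {p: {"type": t} for p, t in entry["params"].items()},
                    "required": list(entry["params"]),
                },
            },
        })
    return tools
```

Generating definitions from one catalogue, rather than hand-writing them per agent, keeps the tools an agent *can* request in step with the workflows that actually exist.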

Implementation roadmap

Trying to “do agentic AI” everywhere at once is a good way to stall. A phased roadmap works better.

Phase 1: 0-90 days

Focus on clarifying 2 or 3 high-value journeys (e.g., SME onboarding, payments investigations). Stand up a baseline stack (Azure/OCI, API gateway, n8n/Logic Apps) and design a minimum viable guardrail model. Ship one production pilot in a low-risk domain.

Phase 2: 3-12 months

Consolidate early experiments into a governed agent platform. Add 2 or 3 more domain agents to see cross-domain learning. Enhance your data platform and RAG capabilities, and begin change programmes so product owners know how to frame work as agent workflows.

Phase 3: 12-24 months

Treat the agent fabric as standard infrastructure. Maintain an internal catalogue of agents and tools with SLAs. Run continuous evaluation of agent performance and integrate AI agents into planning cycles and recovery playbooks.

How our team implements this

At nocodecreative.io we typically approach agentic architectures in four stages.

- Discovery and architecture

We map your existing cores, data flows and integration points. We then design a reference architecture for your agent fabric that fits your constraints.

- Governed platform setup

We implement the basic platform components: API Management, Event Hubs, Azure Data Lake, n8n clusters, and security infrastructure.

- First wave of agents and workflows

Together with your teams we build the first few high-value workflows, aligning them with model risk and compliance requirements.

- Enablement and handover

We equip your teams to build their own workflows and prompts safely, with reusable templates and pattern libraries.

Key risks: data quality, access control and organisational change

None of this is free of risk. The main failure modes we see are predictable and manageable.

- Data quality and lineage: If records are inconsistent, agents will hallucinate or fail. Mitigation: choose early use cases that tolerate fuzziness and instrument agents to surface uncertainty.

- Excessive access scopes: Giving agents broad access is dangerous. Keep tool scopes narrow and route sensitive operations through governed n8n workflows.

- Shadow AI and pilot sprawl: Avoid disconnected bots. A central agent fabric gives you a place to attach standards without suffocating innovation.

- Change fatigue: Staff need to understand that agents are collaborators. Training and humane interfaces are critical.

Keeping your 2026 AI roadmap honest

AI agents at production scale are no longer science fiction. The organisations that benefit in 2026 will be the ones that treat this as an operating model shift and invest early in the data, governance and orchestration foundations.

If you would like help turning these patterns into concrete architectures, n8n workflows and Power Platform solutions in your own bank or fintech, the team at nocodecreative.io does exactly this kind of work across financial services and other regulated sectors.

Get in touch to discuss your automation needs

References

- Banking in 2026: Production scale AI agents (FinTech Futures)

- Overview of OCI Generative AI Agents Service (Oracle Cloud)

- Generative AI Agents Features (Oracle Product Page)

- Announcing OCI Generative AI Agents Platform (Oracle Blog)

- Eurobank to implement Agentic AI (FinTech Futures)